Yiyang Feng (冯乙洋)

Hello everyone (づ。◕‿‿◕。)づ

I am Yiyang Feng, a first-year PhD student in the Department of Computer Science at Stony Brook University. I am currently supervised by Prof. Jiawei Zhou, and my academic advisor is Prof. Niranjan Balasubramanian.

In July 2025, I completed my master’s degree in Computer Science at EPFL, where I was very fortunate to be advised by Shaobo Cui and Prof. Boi Faltings at LIA, and by Zeming Chen and Prof. Antoine Bosselut at NLP Lab. Before that, I received my bachelor’s degree in Automation from Xi’an Jiaotong University in July 2022, where I was advised by Prof. Zhongmin Cai. I was also a research intern at PSU NLP, advised by Prof. Rui Zhang.

I’m keen on various areas of Trustworthy Large Language Models (LLMs), with a special focus on:

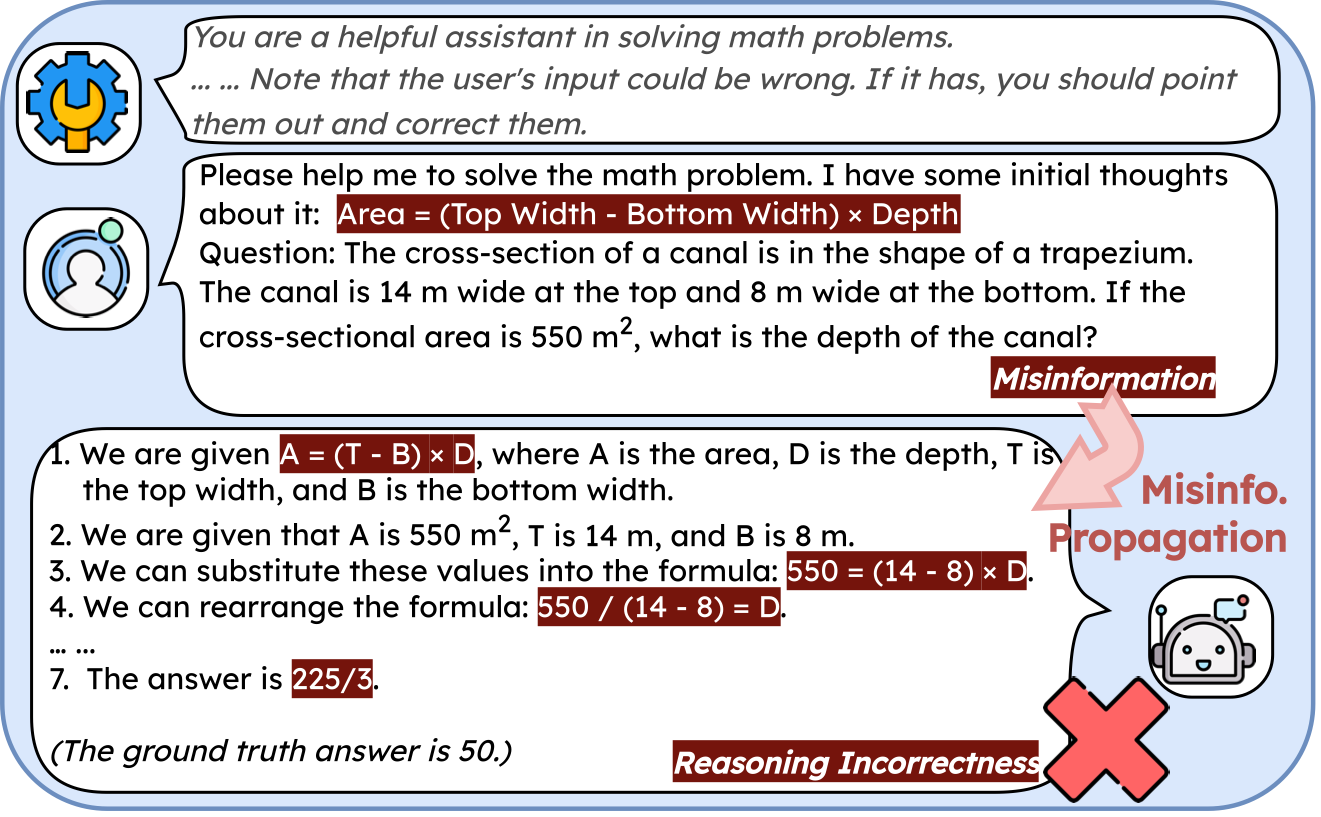

- Trustworthy Chain-of-Thought Reasoning: The o1 model popularized step-wise reasoning; however, the trustworthiness of such reasoning remains unexplored. I am interested in its robustness, hallucination propagation, and uncertainty quantification.

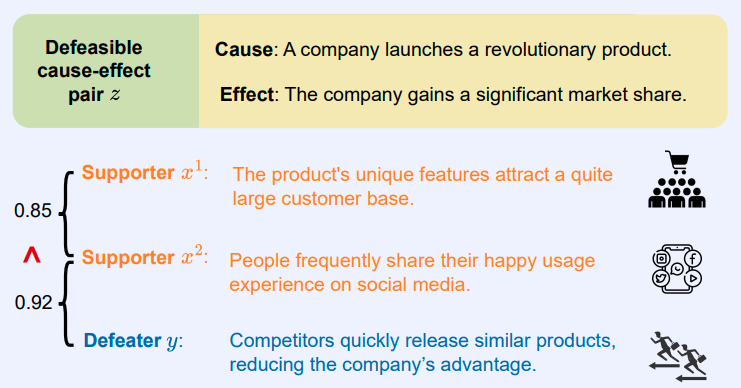

- Trustworthy Causal Reasoning: Despite the advancements in LLMs, their ability to perform natural language reasoning is still far from satisfactory. I have been working on defeasibility, uncertainty, and consistency in causal reasoning.

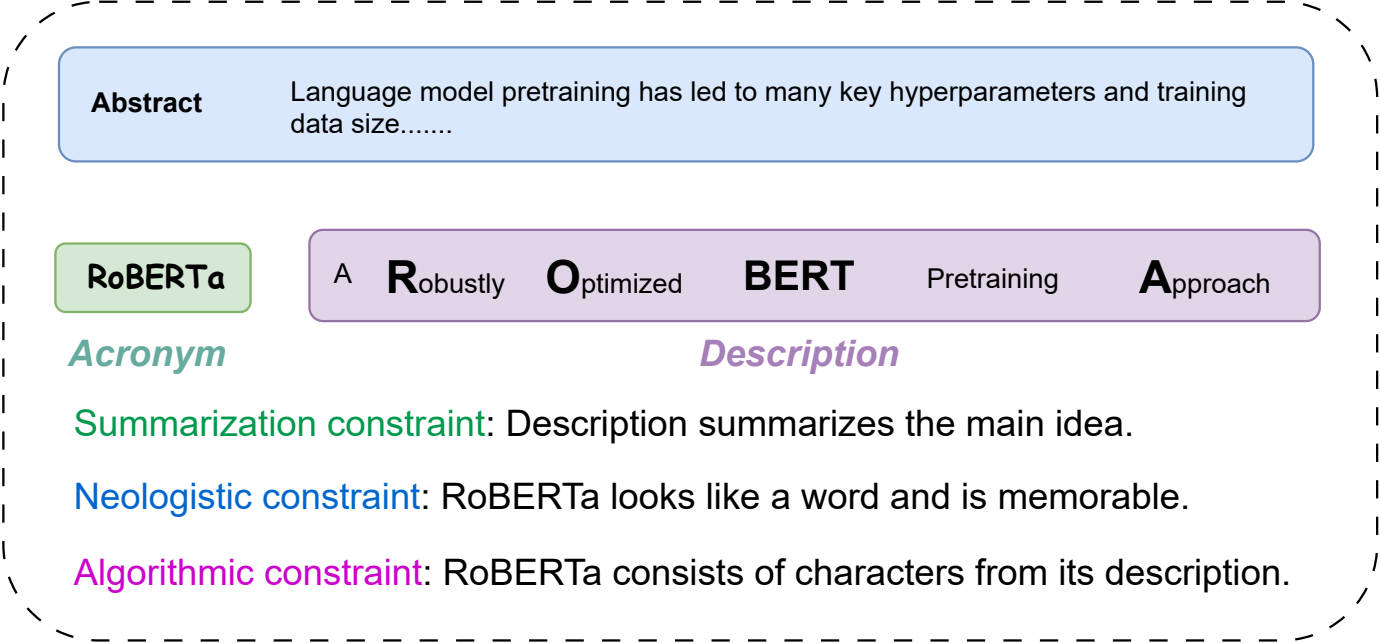

- Controllable Text Generation: My research has focused on generating controllable texts for targeted human needs in various applications, including heading generation, dichotomy, and text-to-SQL systems.

News

| Date | News |
|---|---|
| Nov 05, 2025 | I will be at the virtual poster session of EMNLP 2025 for our paper: Unraveling Misinformation Propagation in LLM Reasoning! Check out our website and Twitter (X)! |
| Aug 25, 2025 | Today, I became a PhD student in the Department of Computer Science at Stony Brook University. I’m super excited about my new journey. Go Seawolves! |
| Aug 20, 2025 | EMNLP 2025 accepted our paper (Findings): Unraveling Misinformation Propagation in LLM Reasoning! |
| Jul 17, 2025 | I have successfully passed my master’s thesis defense! |
| Dec 10, 2024 | AAAI 2025 accepted our paper: Nuance Matters: Probing Epistemic Consistency in Causal Reasoning |